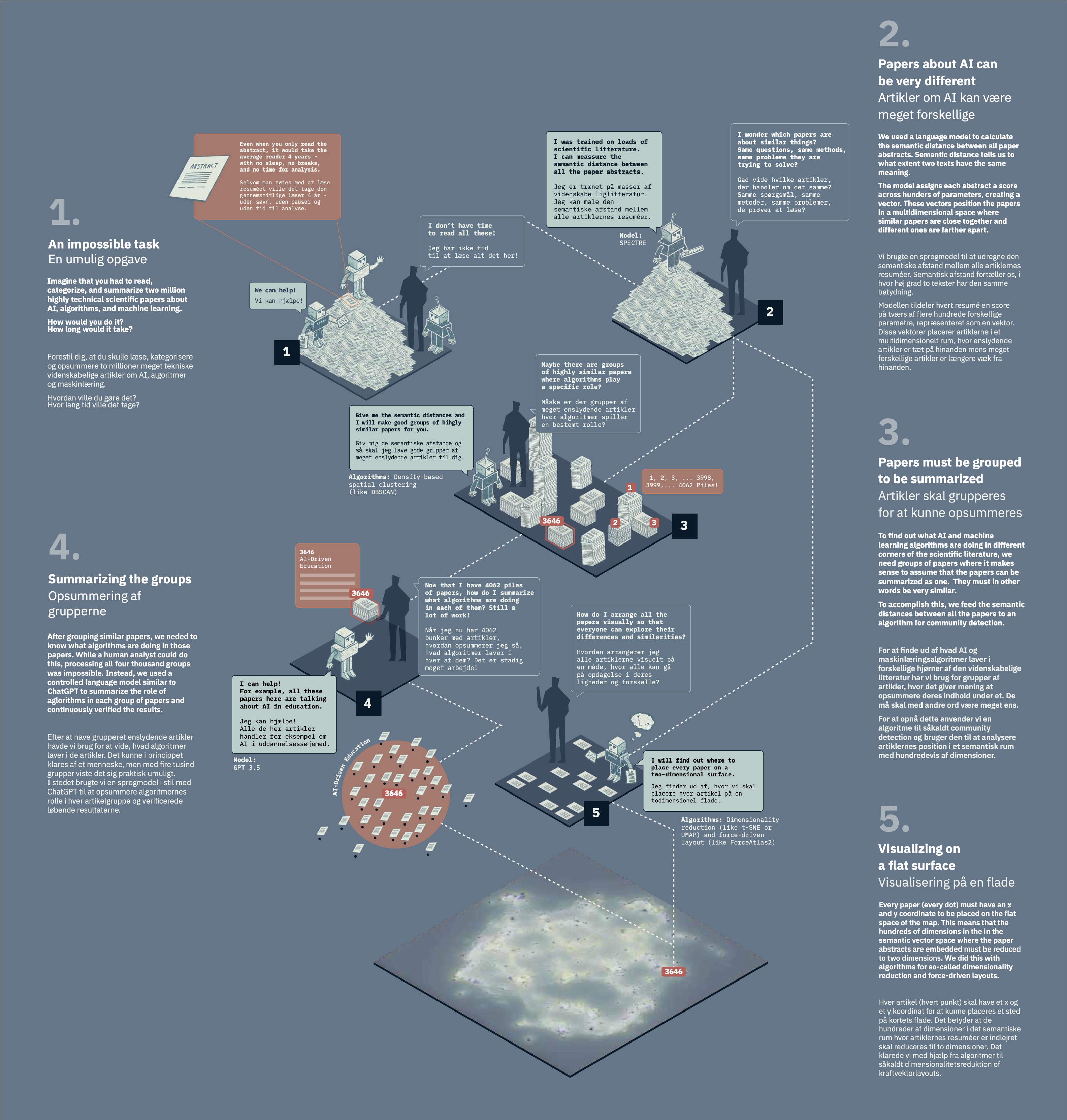

Methodology

A MAP MADE WITH ARTIFICIAL INTELLIGENCE

This map would not have been possible without the assistance of generative AI and a range of different algorithms. If you explore the map and look for places like “language processing”, “text clustering algorithms”, “word embedding advancements”, or “text summarization advancements”, you will find the methods we used to build it.

DO YOU TRUST THIS MAP?

AI is not infallible, and neither is the map of AI. The language models we used to classify and analyse the two million scientific papers are the same technology that powers chatbots and other generative AI. This means that, by default, we cannot trust them. Language models are good at putting words together in ways that sound human. They do so based on probabilities, but they do not possess what we would call knowledge of the topic they are writing about.
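To make the point about probabilities concrete, here is a deliberately tiny sketch: a bigram model that learns only which word tends to follow which in a toy corpus of three invented sentences. It is an illustration under loose assumptions, far simpler than the large language models used for the map, and the function names are hypothetical. Even so, it can produce a phrase such as “the algorithm improves word embeddings”, which reads naturally but appears nowhere in its training text, because nothing in the model checks its output against facts.

```python
import random
from collections import Counter, defaultdict

# Toy illustration only: real language models use neural networks over
# tokens, not raw word counts. All names here are hypothetical.

def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is directly followed by another word."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def next_word_probs(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn the follow-counts for `word` into a probability distribution."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def generate(follows: dict[str, Counter], start: str, length: int, seed: int = 0) -> str:
    """Pick each next word at random, weighted only by how often it followed
    the previous word. No step checks whether the result is true."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length - 1):
        probs = next_word_probs(follows, words[-1])
        if not probs:  # no known continuation, so stop early
            break
        choices, weights = zip(*probs.items())
        words.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(words)

corpus = [
    "the model improves text summarization",
    "the model improves word embeddings",
    "the algorithm improves text clustering",
]
follows = train_bigrams(corpus)
print(next_word_probs(follows, "improves"))  # text ≈ 0.67, word ≈ 0.33
print(generate(follows, "the", 5))
```

Every generated sentence is locally plausible, since each word pair was seen in the corpus, yet the model has no way to tell a true combination from an invented one.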

For example, when we ask a language model to summarize what role algorithms play in a group of scientific papers, we cannot simply provide the titles of those papers and expect it to know the answer. The result would likely be a text that sounds plausible and has all the outward trappings of a summary, but with a high risk of so-called hallucinations. Since the language model is not a lexicon and has no access to a knowledge database, it cannot fact-check its own results. It can only produce a combination of words that is likely in the given context, and that combination can easily include faulty information.

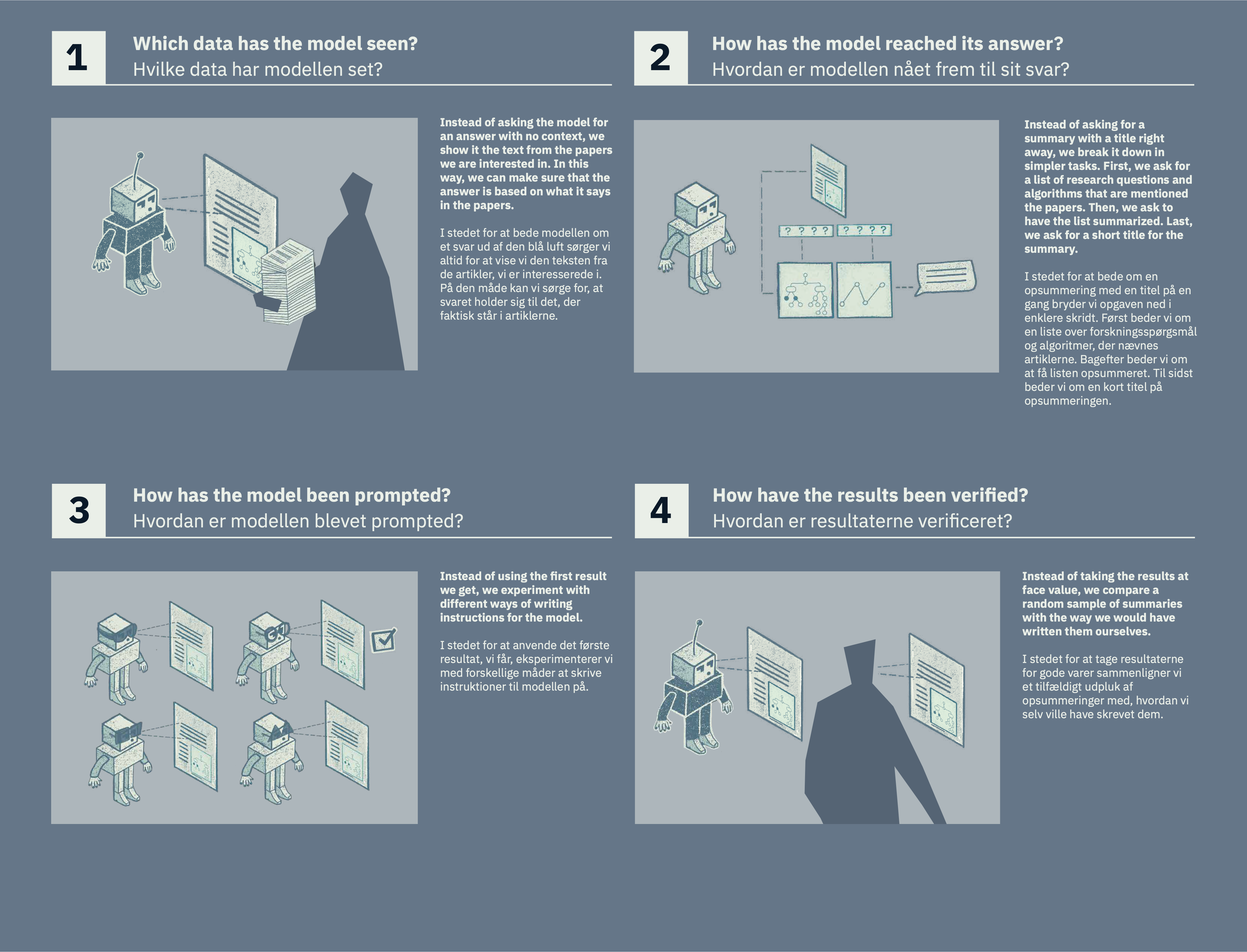

Instead, we take a series of precautions to be as sure as possible that the results are trustworthy: